Description

Algebraic statistics is a branch of mathematical statistics that focuses on the use of algebraic, geometric, and combinatorial methods in statistics. The workshop will focus on three themes: (1) modeling environmental and ecological systems so that we can better understand the effects of climate change on these systems; (2) reimagining urban development and economic systems to address persistent inequity in daily living activities; and (3) providing theoretical underpinnings for statistical learning techniques, both to understand the implications of their widespread use and to ease adaptation to novel applications.

These themes emerged in the Fall 2023 long program “Algebraic Statistics and our Changing World” hosted at IMSI. As the next chapter in the interdisciplinary field of algebraic statistics, this workshop is an exciting opportunity to expand on these themes. All participants interested in algebraic statistics are welcome.

Funding

Priority funding consideration will be given to those who register by May 20, 2025. Funding is limited.

Organizers

Speakers

Schedule

Speaker: Serkan Hosten (San Francisco State University)

Speaker: Hanbaek Lyu (University of Wisconsin, Madison)

Speaker: Yulia Alexandr (University of California, Los Angeles (UCLA))

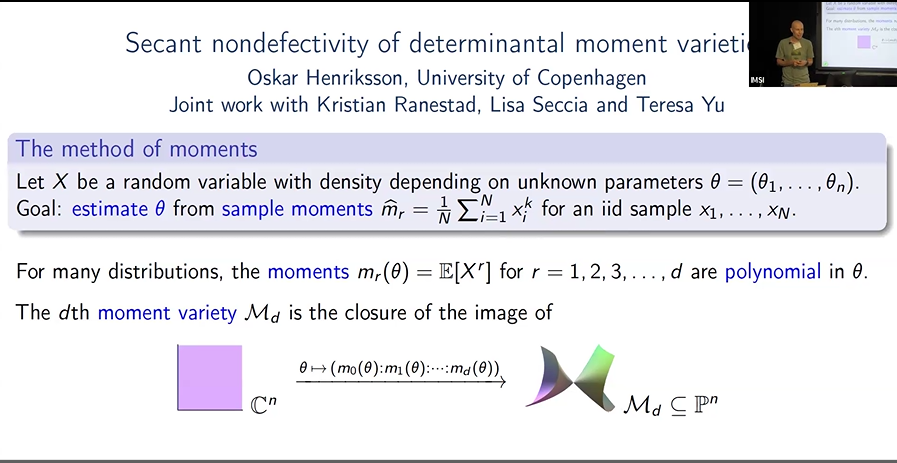

Oscar Henriksson (University of Copenhagen)

Adisa Saka (Obafemi Awolowo University)

Udani Ranasighe (University of Hawaii)

Bella Finkel (University of Wisconsin)

Francisco Ponce-Carrion (North Carolina State University)

Yaoying Fu (Boston College)

Ikenna Nometa (University of Hawaii at Manoa)

Bryson Kagy (North Carolina State University (NCSU))

Speaker: Shelby Cox (University of Michigan)

Speaker: Álvaro Ribot (Harvard University)

Speaker: Nicolette Meshkat (Santa Clara University)

Speaker: Debdeep Pati (University of Wisconsin-Madison)

Speaker: Aida Maraj (Max Planck Institute of Molecular Cell Biology and Genetics)

Speaker: Julia Lindberg (University of Texas at Austin)

Speaker: Hector Baños (California State University, San Bernardino (CSU San Bernardino))

Speaker: Elina Robeva (University of British Columbia)

Speaker: Cash Bortner (California State University, Stanislaus (CSU Stanislaus))

Speaker: Danai Deligeorgaki (KTH)

Speaker: Henry Schenck (Auburn University)

Speaker: Seth Sullivant (North Carolina State University)

Speaker: Roser Homs Pons (CRM (Centre de Recerca Matemàtica))

Speaker: Max Hill (University of Hawaii, Manoa)

Speaker: Carlos Amendola (Technische Universität Berlin)

Videos

A unified look at contingency tables, optimal transport, and Schrödinger bridge

Hanbaek Lyu

July 21, 2025

Identifiability, indistinguishability, and other problems in biological modeling

Nicolette Meshkat

July 22, 2025

Identifiability in Phylogenetic Networks under the Coalescent: From Simpler to Broader Classes

Hector Baños

July 23, 2025

Semialgebraic Hypothesis Testing with Incomplete U-Statistics: A Case Study with Biologically-Motivated Models

Max Hill

July 25, 2025